
Henghui Bao

I'm a master's student in Computer Science at the University of Southern California (USC), advised by Yue Wang and Prof. Laurent Itti. I have also had the privilege of working under the guidance of Prof. Jiayuan Gu. My research interests include 3D computer vision and vision for robotics. I am interested in robust robot learning with minimal human supervision and in generating photorealistic 3D environments from visual data to enhance simulation and real-world applications.


Research


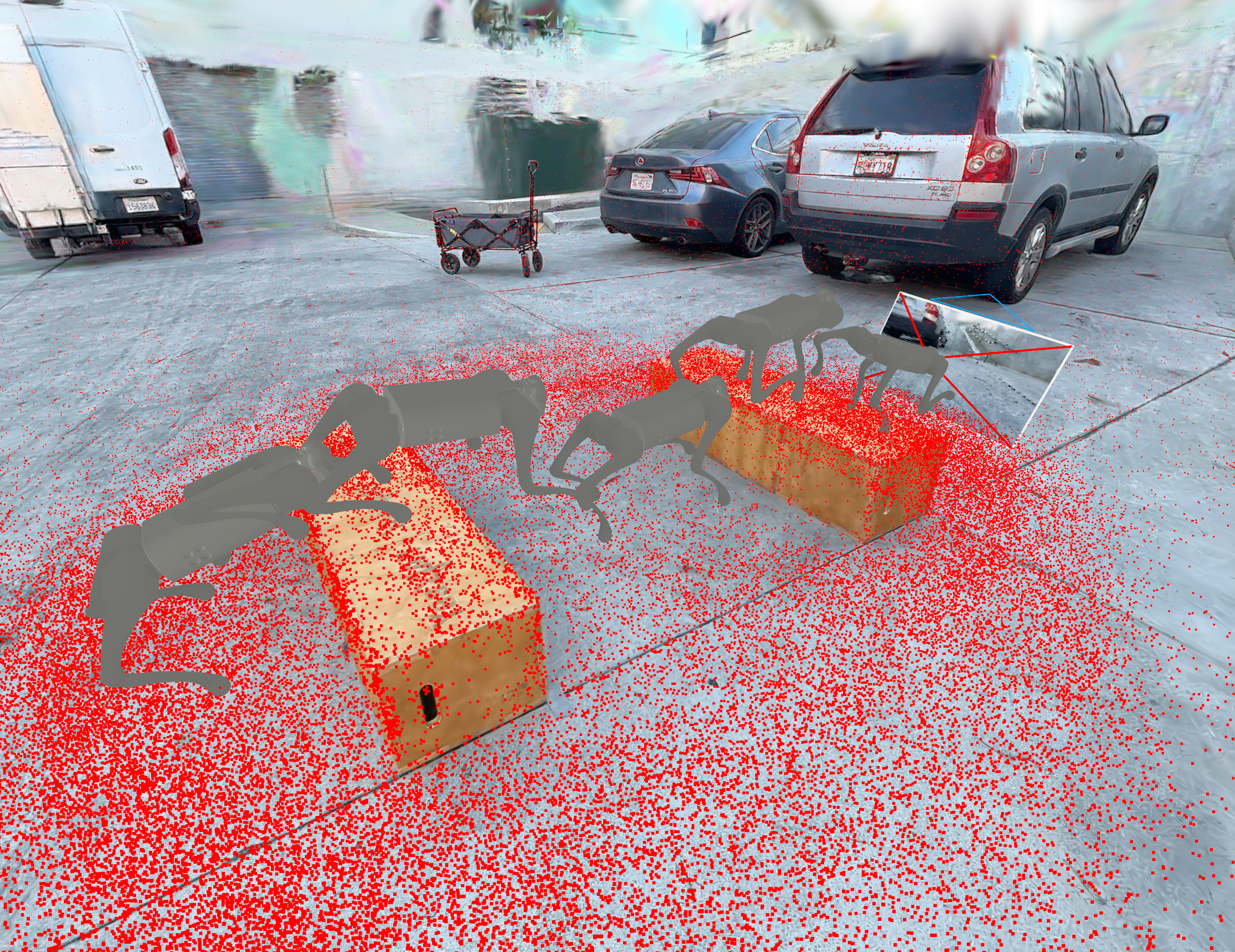

The Neverwhere Visual Parkour Benchmark Suite

Ziyu Chen, Henghui Bao*, Haoran Chang, Alan Yu, Ran Choi, Kai McClennen, Gio Huh, Kevin Yang, Ri-Zhao Qiu, Yajvan Ravan, John J. Leonard, Xiaolong Wang, Phillip Isola, Ge Yang†, Yue Wang†

Under Review, 2025


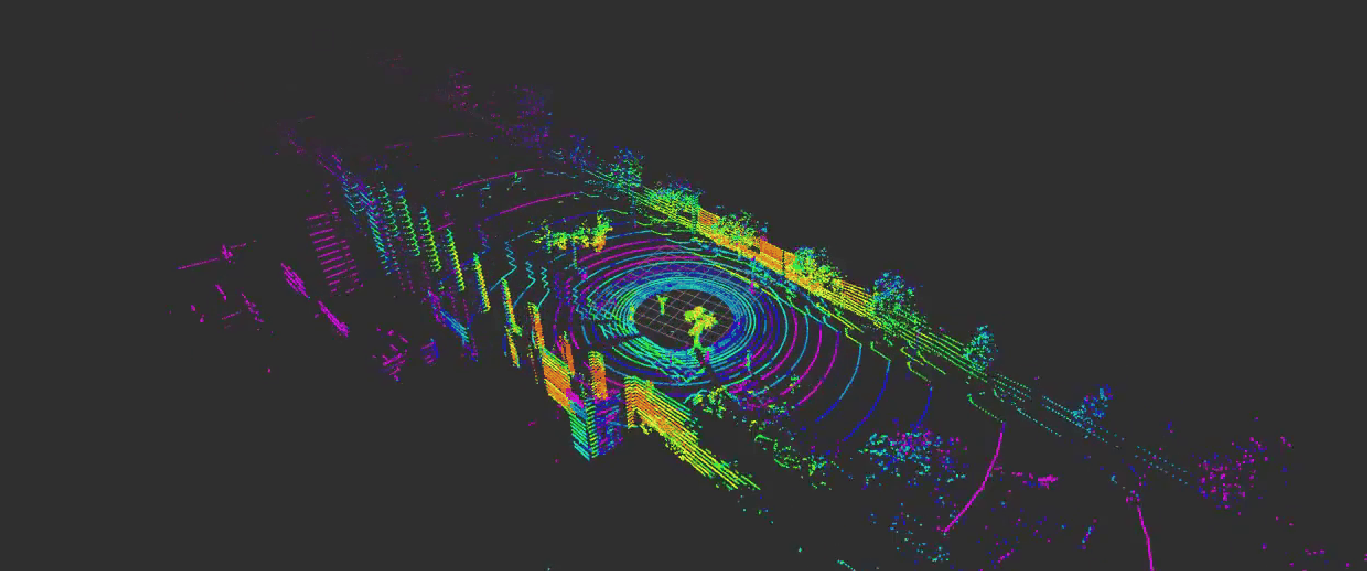

USCILab3D: A Large-scale, Long-term, Semantically Annotated Outdoor Dataset

Kiran Lekkala*, Henghui Bao*, Peixu Cai, Wei Zer Lim, Chen Liu, Laurent Itti

NeurIPS Datasets and Benchmarks Track, 2024

USCILab3D is a densely annotated outdoor dataset of 10M images and 1.4M point clouds captured over USC's 229-acre campus. It offers multi-view imagery, 3D reconstructions, and 267 semantic categories for advancing 3D vision and robotics research.


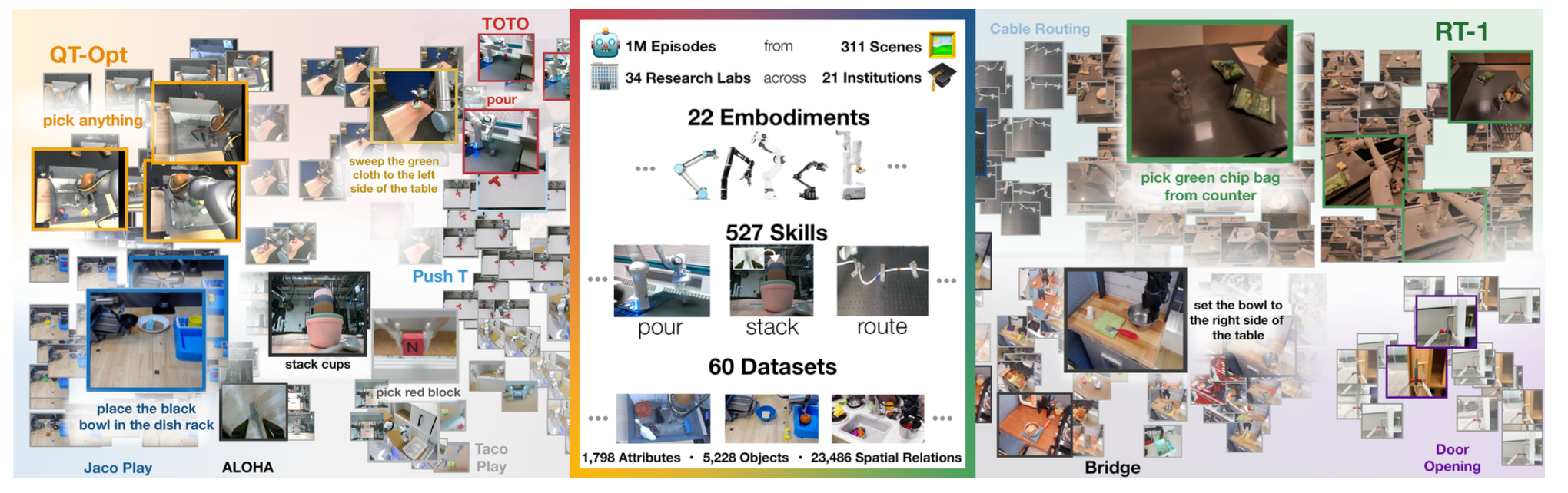

Open X-Embodiment: Robotic Learning Datasets and RT-X Models

Open X-Embodiment Collaboration

IEEE International Conference on Robotics and Automation (ICRA) (Best Conference Paper Award)


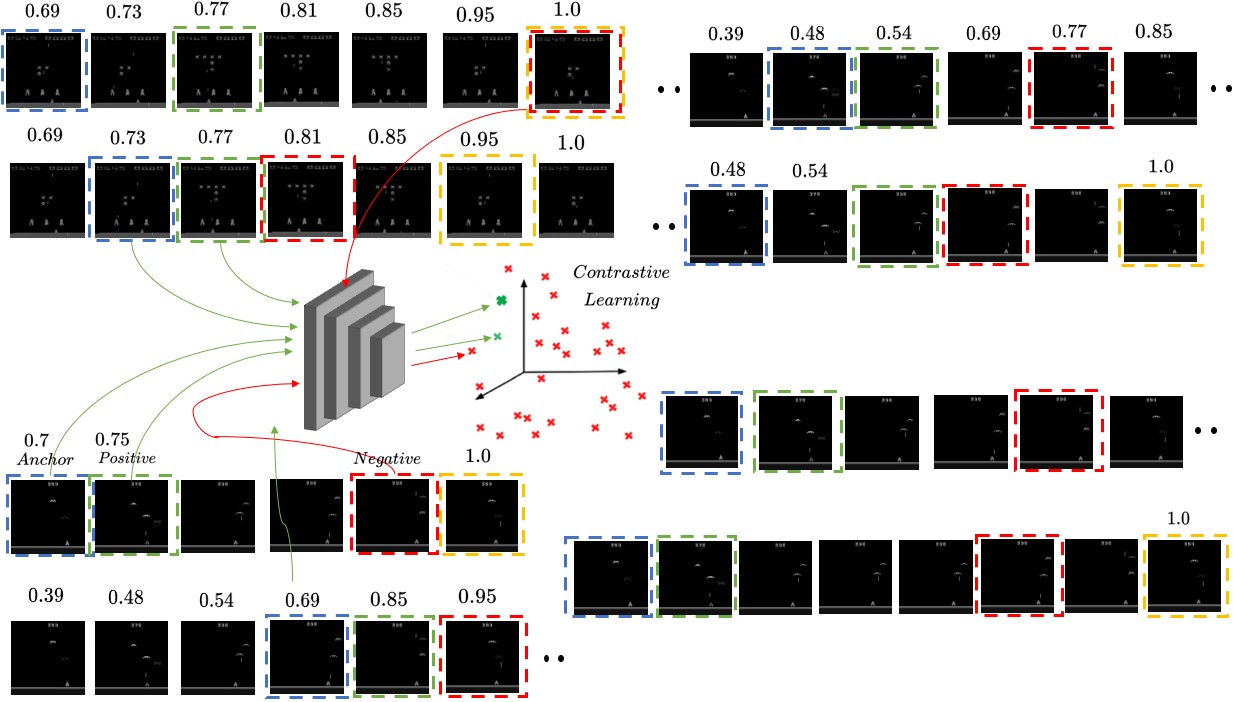

Value Explicit Pretraining for Learning Transferable Representations

Kiran Lekkala, Henghui Bao, Sumedh Anand Sontakke, Erdem Biyik, Laurent Itti

In submission to RA-L

Value Explicit Pretraining (VEP) is a method for transfer reinforcement learning that pre-trains an objective-conditioned encoder to be invariant to environment changes. It uses a contrastive loss and Bellman return estimates to learn temporally smooth, task-focused representations, achieving superior generalization and sample efficiency on realistic navigation and Atari benchmarks.


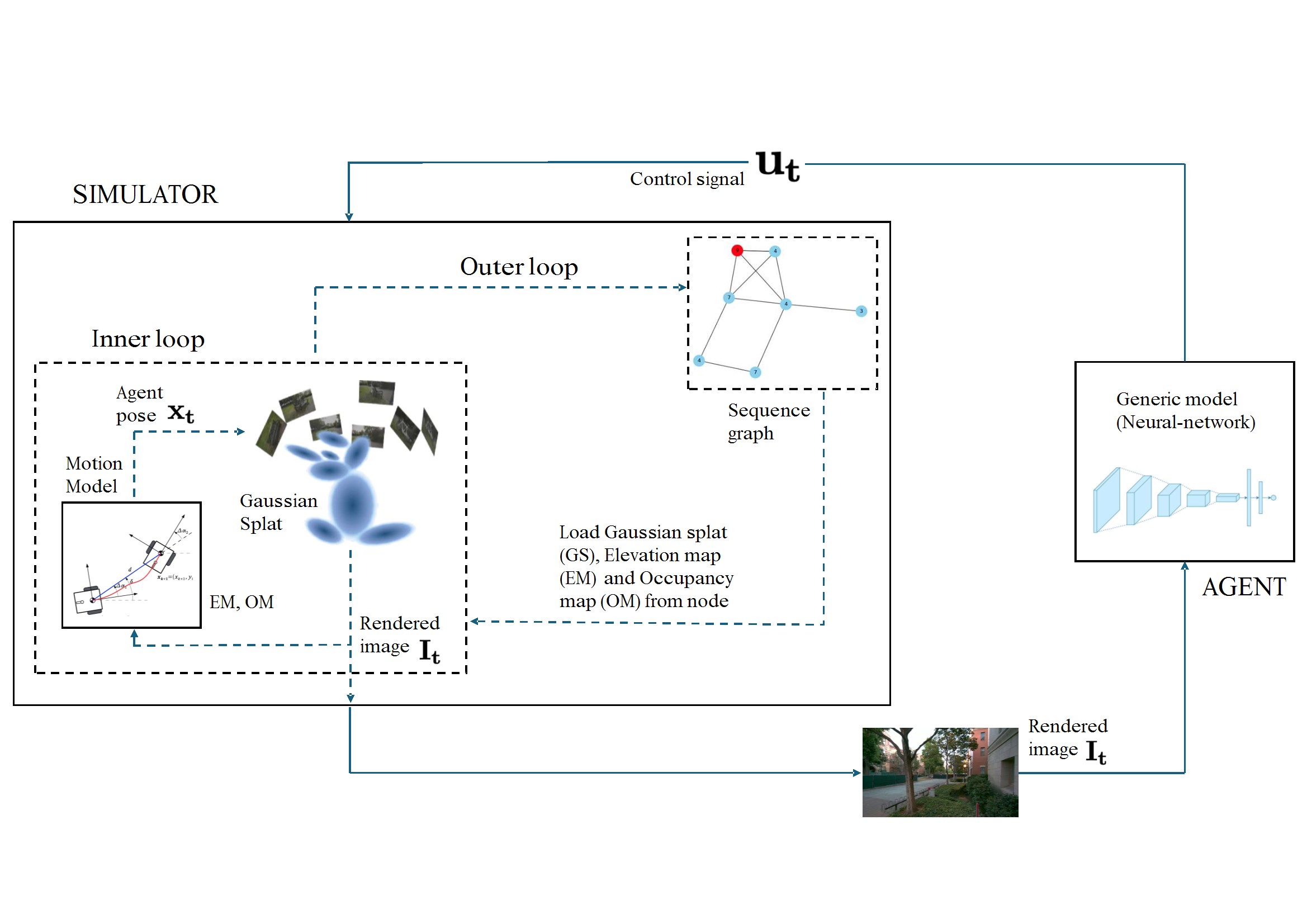

Real-world Visual Navigation in a Simulator: A New Benchmark

Henghui Bao*, Kiran Lekkala*, Laurent Itti

CVPR Workshop POETS, 2024

Beogym is a data-driven simulator that leverages Gaussian Splatting and a large-scale outdoor dataset to render realistic first-person imagery with smooth transitions, enabling training and evaluation of autonomous agents for visual navigation.

Services

Conference reviewer: CoRL 2024 Workshop XE
